MacAI

Giorgio Rossi

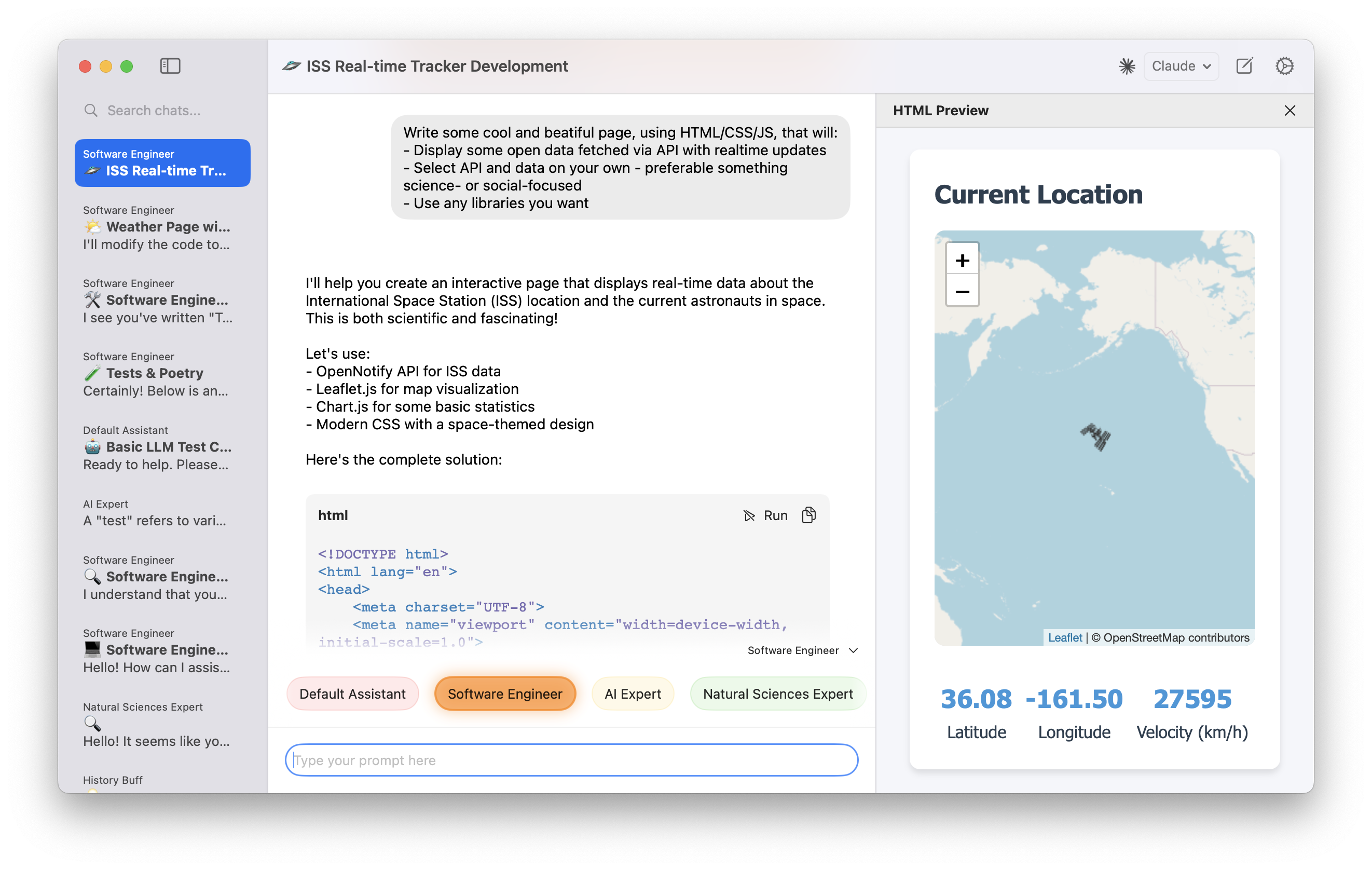

MacAI lets you run a range of open-source LLMs directly on your own Mac, privately and securely. It uses your local hardware to generate text, translate languages, draft creative content, and answer questions.

User Sentiments

Top Likes

- Privacy-focused - runs models locally.

- Supports many open-source LLMs.

- Easy-to-use interface.

- Active development and community.

Top Dislikes

- Resource intensive (requires a powerful Mac).

- Can be challenging to set up initially for some users.

- Documentation could be improved.

Popular Comments

Reddit User - u/Efficient_Ad_5331

2024-07-20

I'm loving MacAI. I've been using it for a few weeks now and it's so much faster than using online services, plus it's private. Highly recommended!

Product Hunt User - @arvidkahl

2024-02-01

MacAI makes running LLMs locally so easy. Great work!

GitHub User - @simonw

2023-12-15

This is a really promising project. Excited to see how it develops.

Reddit User - u/Competitive-Ad-9543

2024-05-03

Had some trouble getting it set up at first, but the Discord community was super helpful. Now it's working great!

Detailed Review

MacAI lets users run large language models (LLMs) without relying on cloud services, which keeps data on the device and can be faster than a hosted service, depending on the user's hardware. It simplifies running open-source models such as LLaMA, Alpaca, and GPT4All directly on a Mac. The interface is accessible even to those without extensive technical expertise, though some initial setup and model downloading are required.

Standout Features

- Local Processing: Runs LLMs on your Mac, keeping your data private.

- Support for Multiple Models: Compatible with a wide range of open-source LLMs.

- User-Friendly Interface: Simplifies interaction with complex models.

- Active Community: Provides support and resources through Discord and GitHub.

Conclusion

MacAI is an excellent choice for users who prioritize privacy and local processing of LLMs. It offers a streamlined way to use powerful AI models without cloud services. Some initial setup is required, and a powerful Mac is recommended for optimal performance, but the benefits of privacy and speed make it a compelling option for those who want to explore local AI. The active community and ongoing development add to its value.
